Addressing algorithm challenges: reducing the risk of discriminatory outcomes

It is common knowledge that the use of algorithms comes with several challenges, including:

A.I. Bias and Discrimination

Algorithms are often trained on historical data that may contain biases. If the training data reflects societal biases, the algorithm can perpetuate and even amplify them, leading to unwanted, discriminatory outcomes.

Transparency and Accountability

Many complex algorithms, especially in machine learning, operate as “black boxes”, making it challenging to understand how they reach specific decisions. Lack of transparency can be problematic, especially in critical areas like finance, healthcare, and criminal justice.

Data Quality Issues

Algorithms depend on data, and if the data used for training is of poor quality, incomplete or unrepresentative, the algorithm’s performance can suffer.
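Some of these quality problems can be measured before any training starts. The sketch below is a minimal illustration, assuming records arrive as Python dictionaries. The function `data_quality_report`, the field names and the sample records are hypothetical, and a real pipeline would check much more (types, value ranges, freshness).

```python
def data_quality_report(records, required_fields):
    """Summarise two basic quality signals for a list of dict records:
    the share of records missing each required field (None or empty string)
    and the number of exact duplicate records."""
    if not records:
        raise ValueError("no records to check")
    n = len(records)
    missing_share = {
        field: sum(1 for r in records if r.get(field) in (None, "")) / n
        for field in required_fields
    }
    seen = set()
    duplicates = 0
    for r in records:
        # Sorting the items makes the key independent of dict insertion order.
        key = tuple(sorted(r.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"missing_share": missing_share, "duplicates": duplicates}


# Hypothetical sample data.
sample = [
    {"name": "Ann", "age": 34},
    {"name": "Bob", "age": None},
    {"name": "Ann", "age": 34},
    {"name": "", "age": 51},
]
print(data_quality_report(sample, ["name", "age"]))
# {'missing_share': {'name': 0.25, 'age': 0.25}, 'duplicates': 1}
```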

Addressing these problems requires a combination of technological advances, ethical considerations and legal frameworks. Part of the solution lies with governments, which can create a sound legal framework. But what can companies themselves do to avoid these problems?

What can, and should, companies do?

To avoid algorithmic bias, companies can take several measures to ensure fairness and reduce the risk of discriminatory outcomes. Here are some key steps:

Know the input: Diverse and Representative Training Data

Ensure that the training data used to develop algorithms is diverse and representative of the population the algorithm is intended to serve. This helps mitigate biases present in historical data.
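When collecting more data is not feasible, a common complementary technique is reweighting: records from underrepresented groups count more heavily during training. The sketch below assumes each record has a group attribute and that the target population shares are known. The functions `reweight` and `weighted_shares`, the `region` attribute and the 50/50 split are hypothetical.

```python
from collections import Counter


def reweight(records, key, population_shares):
    """Return one weight per record so that the weighted share of each group
    matches its target population share.

    weight = population_share * total_records / records_in_group.
    Records whose group is absent from population_shares get weight 0.
    """
    counts = Counter(r[key] for r in records)
    total = len(records)
    return [
        population_shares.get(r[key], 0.0) * total / counts[r[key]]
        for r in records
    ]


def weighted_shares(records, key, weights):
    """Check the result: each group's share of the total weight."""
    sums = Counter()
    for r, w in zip(records, weights):
        sums[r[key]] += w
    total = sum(sums.values())
    return {group: s / total for group, s in sums.items()}


# Hypothetical data: 8 records from "north", 2 from "south",
# while the population served is split 50/50.
training = [{"region": "north"}] * 8 + [{"region": "south"}] * 2
w = reweight(training, "region", {"north": 0.5, "south": 0.5})
print(w[0], w[-1])                             # 0.625 2.5
print(weighted_shares(training, "region", w))  # {'north': 0.5, 'south': 0.5}
```

Reweighting cannot help a group that is missing from the data altogether. In that case, only collecting more data will.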

Know the output: Regular Data Audits

Conduct regular audits of the data used in algorithms to identify and correct biases. This involves examining the data for underrepresented or overrepresented groups and ensuring that it reflects real-world diversity.
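A regular audit can compare each group's share of the data with its share of the population and flag groups that fall outside a tolerance band. The sketch below is a minimal illustration. The function `audit_representation`, the 0.8 and 1.25 thresholds and the sample figures are hypothetical choices, not fixed standards.

```python
from collections import Counter


def audit_representation(records, key, population_shares, low=0.8, high=1.25):
    """For each group in population_shares (shares must be > 0), return
    (share in data, representation ratio, status), where
    ratio = share in data / share in population."""
    counts = Counter(r[key] for r in records)
    total = len(records)
    report = {}
    for group, expected in population_shares.items():
        observed = counts.get(group, 0) / total
        ratio = observed / expected
        if ratio < low:
            status = "underrepresented"
        elif ratio > high:
            status = "overrepresented"
        else:
            status = "ok"
        report[group] = (observed, round(ratio, 2), status)
    return report


# Hypothetical audit: no records at all from "east".
data = [{"region": "north"}] * 7 + [{"region": "south"}] * 3
report = audit_representation(data, "region", {"north": 0.5, "south": 0.3, "east": 0.2})
for group, row in report.items():
    print(group, row)
```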

Make it understandable: Transparency and Explainability

Strive for transparency in algorithmic decision-making. Ensure that algorithms are explainable and that users can understand how decisions are reached. This transparency aids in accountability and allows for external scrutiny.
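For simple models, explainability can be as direct as showing how much each input contributed to the result. The sketch below illustrates this for a linear scoring model. The feature names, weights and the function `explain_linear_score` are hypothetical. For non-linear models, attribution methods such as SHAP or LIME serve a similar purpose.

```python
def explain_linear_score(weights, bias, features):
    """Score a linear model (bias + sum of weight * value) and return the
    score with each feature's contribution, largest absolute effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical model and applicant.
weights = {"years_experience": 0.5, "certifications": 1.0, "employment_gap": -1.5}
applicant = {"years_experience": 4, "certifications": 1, "employment_gap": 1}

score, ranked = explain_linear_score(weights, bias=1.0, features=applicant)
print(f"score: {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

A breakdown like this also makes it easier to spot when a single feature dominates a decision.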

Confirm and learn: User Feedback

Encourage and gather feedback from users who interact with systems driven by algorithms. This feedback loop can help identify issues and areas for improvement.
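Feedback is most useful when it is aggregated and acted on. The sketch below assumes each piece of feedback is recorded as a (category, is_negative) pair. The function `flag_for_review`, the categories, the 30% threshold and the minimum of five votes are all hypothetical.

```python
from collections import defaultdict


def flag_for_review(feedback, threshold=0.3, min_votes=5):
    """feedback: iterable of (category, is_negative) pairs.
    Return (category, negative_rate) for categories with at least min_votes
    votes whose negative rate exceeds threshold, highest rate first."""
    totals = defaultdict(int)
    negatives = defaultdict(int)
    for category, is_negative in feedback:
        totals[category] += 1
        if is_negative:
            negatives[category] += 1
    flagged = []
    for category, n in totals.items():
        if n >= min_votes:  # too few votes says little, so skip
            rate = negatives[category] / n
            if rate > threshold:
                flagged.append((category, rate))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical feedback log.
feedback = (
    [("sports", False)] * 9 + [("sports", True)]
    + [("finance", True)] * 3 + [("finance", False)] * 3
    + [("health", True)] * 2
)
print(flag_for_review(feedback))  # [('finance', 0.5)]
```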

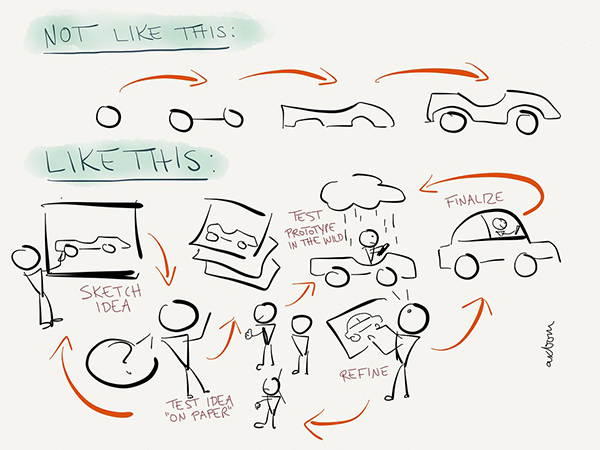

These steps probably make sense, but how do you put them into practice?

Well, two of the steps, the last two, relate to human interaction and are part of the User Experience (UX) of a solution.

To gather user feedback, the solution must be designed so that giving feedback is easy and part of daily use, without bothering the user too much. Transparency and explainability are also part of the UX design of the solution: the user should be able to understand what an outcome is based on. We will elaborate on this in a future post.

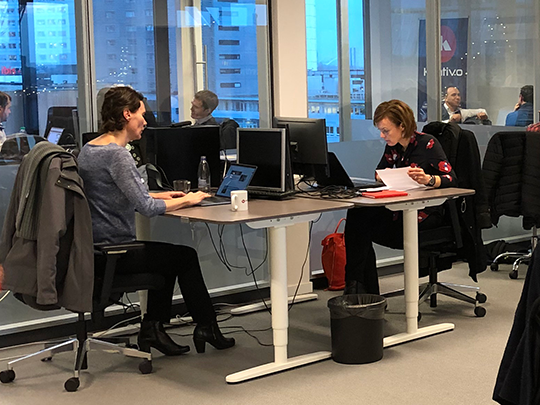

To address the other two steps, knowing the input and the output, we have our own quality assurance Editor’s Desk.

Editor’s Desk: Ensuring qualitative outcomes and bias-free algorithms

Our own human (yes, human) Editor’s Desk oversees the development and deployment of our algorithms and their outcomes.

The editors play a crucial role in curating the training data for algorithms. By selecting and verifying datasets, the Editor’s Desk helps to eliminate biases that may be present in the initial data, fostering fair and unbiased outcomes.

Through regular audits, the Editor’s Desk ensures that the algorithms adhere to the highest standards of quality and remain free from biases. This proactive approach is instrumental in safeguarding against inadvertent prejudices that might creep into automated processes.
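An audit of outputs can compare how often each group receives a favorable outcome. A common heuristic is the "four-fifths rule" from US employment selection guidelines: if a group's selection rate is below 80% of the highest group's rate, that group warrants a closer look. The sketch below is a minimal illustration. The function `impact_report` and the sample decisions are hypothetical. Failing the check signals a need for review, not proof of discrimination.

```python
from collections import defaultdict


def impact_report(decisions, threshold=0.8):
    """decisions: non-empty iterable of (group, was_selected) pairs.
    Returns {group: (selection_rate, impact_ratio, passes)}, where
    impact_ratio = group rate / highest group rate."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    rates = {g: selected[g] / totals[g] for g in totals}
    best = max(rates.values())
    report = {}
    for g, rate in rates.items():
        # If nobody was selected at all, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        report[g] = (rate, ratio, ratio >= threshold)
    return report


# Hypothetical outcomes: group A 25 of 50 selected, group B 12 of 40.
decisions = (
    [("A", True)] * 25 + [("A", False)] * 25
    + [("B", True)] * 12 + [("B", False)] * 28
)
for group, (rate, ratio, passes) in impact_report(decisions).items():
    print(f"{group}: rate={rate:.2f} ratio={ratio:.2f} {'ok' if passes else 'review'}")
```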

For our Mandy-based solutions, our Editor’s Desk employs human tagging, a process in which human experts manually label and tag data points. This human-in-the-loop approach significantly enhances the algorithm’s learning process. Because humans have nuanced understanding and contextual awareness, their input refines the algorithm, making it better at handling complex scenarios and avoiding misinterpretations.
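As an illustration only, and not a description of how Mandy itself works, here are two common building blocks of human tagging. The first combines several editors' labels by majority vote. The second measures how consistently two editors label the same items using Cohen's kappa. The function names, tags and tie-handling policy below are hypothetical.

```python
from collections import Counter


def majority_label(labels):
    """Most common label among annotators, or None on a tie
    (in this sketch, ties would be escalated to a senior editor)."""
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement:
    kappa = (p_observed - p_expected) / (1 - p_expected)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(labels_a)
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


print(majority_label(["relevant", "relevant", "irrelevant"]))  # relevant
print(majority_label(["relevant", "irrelevant"]))              # None

# Hypothetical tags from two editors on the same six items.
editor_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
editor_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(round(cohens_kappa(editor_1, editor_2), 3))  # 0.667
```

A kappa close to 1 means the editors agree far more than chance would predict. A low value suggests the tagging guidelines need clarifying before the tags are used for training.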

By combining these strategies, companies can work toward algorithms that are of higher quality and are more equitable, transparent and accountable. For now, avoiding A.I. bias is an ongoing process that cannot succeed without the involvement of human experts.
